Project Summary

Introduction

Neuroscience is the scientific study of the nervous system, a complex organ system found in nearly every animal on Earth. Since its birth as a distinct scientific discipline in the 1950s and 1960s, neuroscience has concentrated its efforts on “interventionist” laboratory experiments with mice and rats, in which the experimenter manipulates some aspect of the nervous system and carefully observes the effects of that manipulation. This method has been very productive for the last 60 years, and has motivated the rapid development of ever more precise tools for physical and chemical manipulation of nervous system cells and molecules. Unfortunately, these techniques have not been enough to meaningfully connect the cellular and molecular activity of nervous systems to the behaviour and well-being of whole organisms.

Several clues point to a need for neuroscience to expand its work out of the laboratory setting and “into the wild”. Nervous systems evolved for millions of years into countless forms and in unknown, unpredictable environments before we built laboratories; yet, the vast majority of brains we currently study are from only a few species which have been bred for generations in laboratory settings. We are discovering that the differences between an anaesthetized brain, a brain in a restrained animal, and a brain in a freely moving animal can be profound, even when studying neuroscience topics other than movement. We are also discovering that nervous systems are incredibly sensitive to the details of both their immediate context and their past history. These clues, and more, have motivated me and my colleagues in the Intelligent Systems Lab to search for ways to validate laboratory results and techniques under more natural conditions and with larger, more diverse samples of research subjects.

“Surprising Minds”: an experiment embedded in an exhibit

While looking for collaborators with whom I could study neuroscience topics in cuttlefish, I developed a relationship with the Sea Life Centre in Brighton, UK, during the fall of 2016. I agreed to volunteer my time learning their marine animal husbandry procedures by helping with the daily tasks of maintaining the Behind the Scenes research laboratory space. In return, I would be allowed to conduct non-invasive behavioural experiments using their aquarium space, with newly hatched animals that the aquarium staff would help me raise. Unfortunately, no local cuttlefish eggs were found during the 2017 breeding season. Then, during the summer of 2017, a fault was found in one of the aquaculture systems, and the cuttlefish tanks had to be taken offline for repairs.

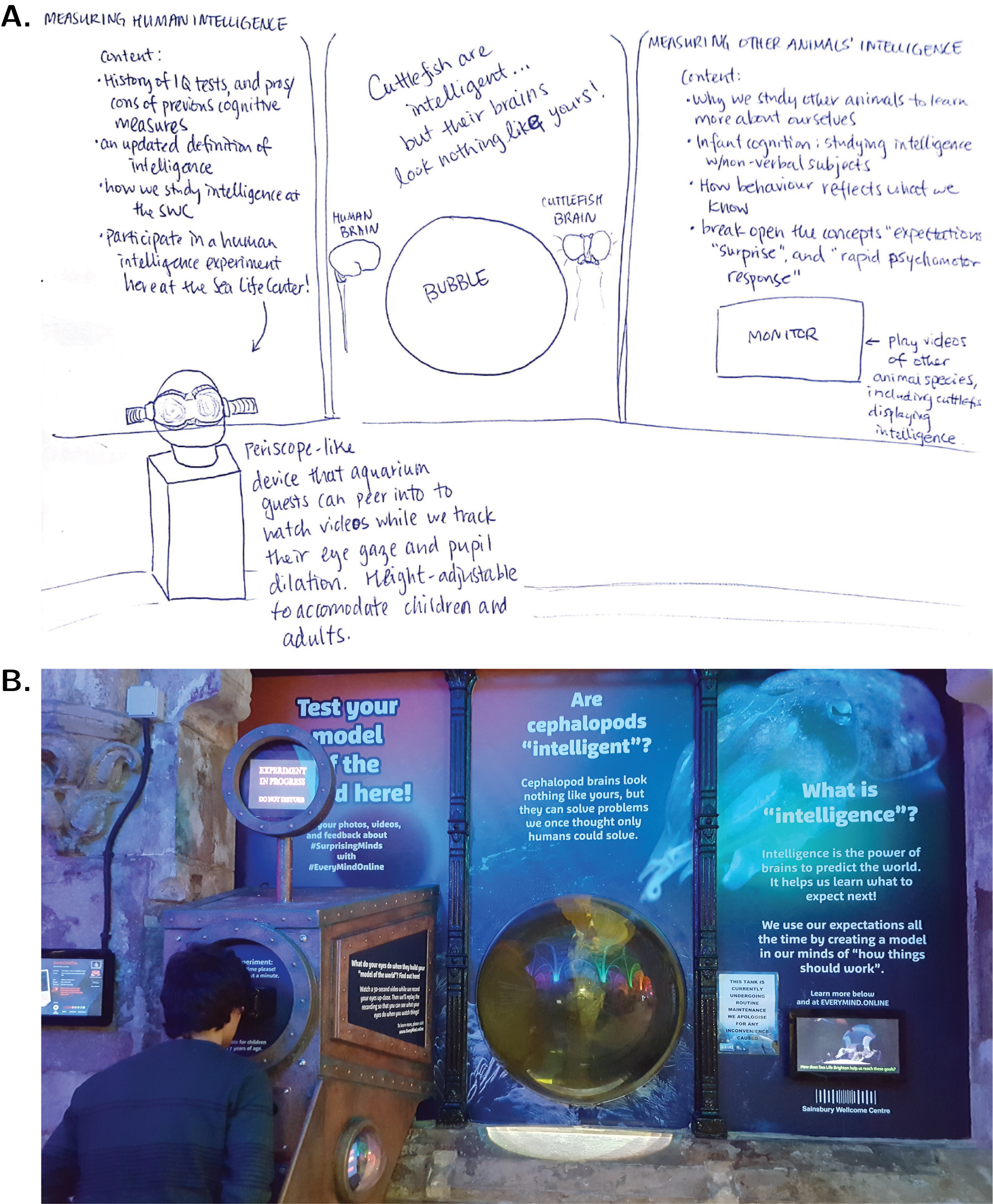

When it became clear that I would not be able to proceed with cuttlefish behaviour experiments as planned, my PhD advisor, Dr. Adam Kampff, encouraged me to propose a behaviour experiment for humans which could take advantage of the huge quantity and diversity of people visiting the Sea Life Centre, especially during the summers when school groups from all around the world visited the aquarium. After meeting with Sea Life Brighton’s display team and the communications manager at our London host institute, the Sainsbury Wellcome Centre, I was able to secure space and funding to develop and build an exhibit about intelligence, surprise, and how we can study neuroscience in both humans and cuttlefish.

We decided to structure the experiment around eye-tracking, for two reasons. The first is that both humans and cuttlefish have fluid-filled, camera-like eyes, and both pay much more attention to what they see than to information from any other sense. The second is that changes in pupil size in response to light, surprise, and cognitive load have been well studied under traditional laboratory conditions. We felt that focusing on eye-tracking as a behavioural neuroscience method would allow us to talk about theoretical topics such as the convergent evolution of nervous systems across different species, while also replicating and validating previous research with a much larger and more diverse sample.

Installation and Pilot phase

During the first week of July 2017, “Surprising Minds” was installed next to the cuttlefish display tank in the Victorian Arcade of the Sea Life Centre in Brighton.

During July and August 2017, the exhibit was tended by a “host scientist”, who invited visitors at the Sea Life Centre to interact with the exhibit, explained the experiment embedded in the exhibit, and engaged in discussions about neuroscience topics such as optical illusions, blindsight, and the structure of the retina.

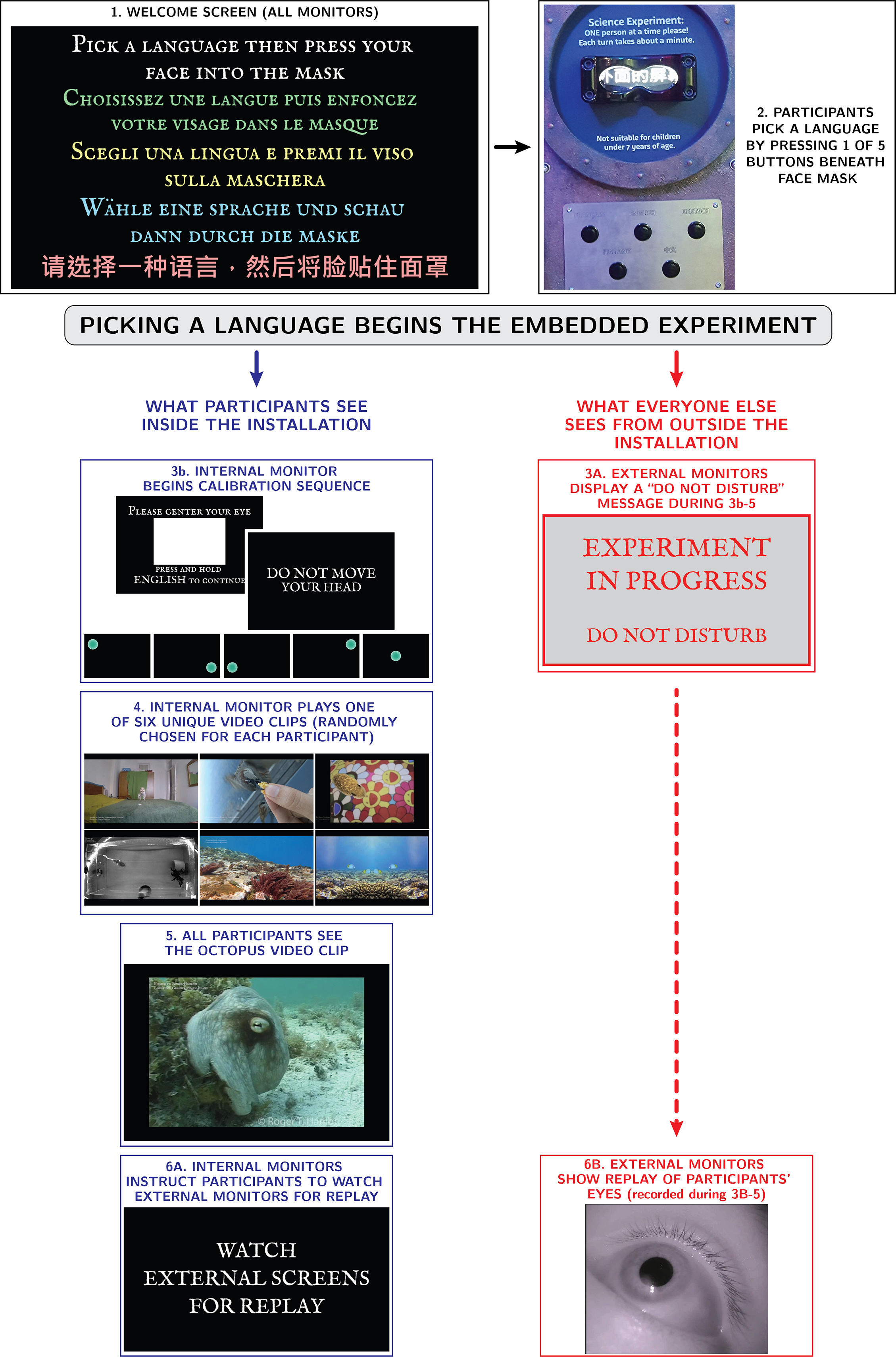

When visitors approached the exhibit, the host scientist invited them to look through the viewing plate and watch a 30-second video clip while cameras recorded their eyes up close. Afterwards, the exhibit replayed the recording so that visitors could see what their eyes do while they watch things.

See Supplemental Figure 1a for details of the exhibit casing that held the experiment, including the original design of the viewing plate.

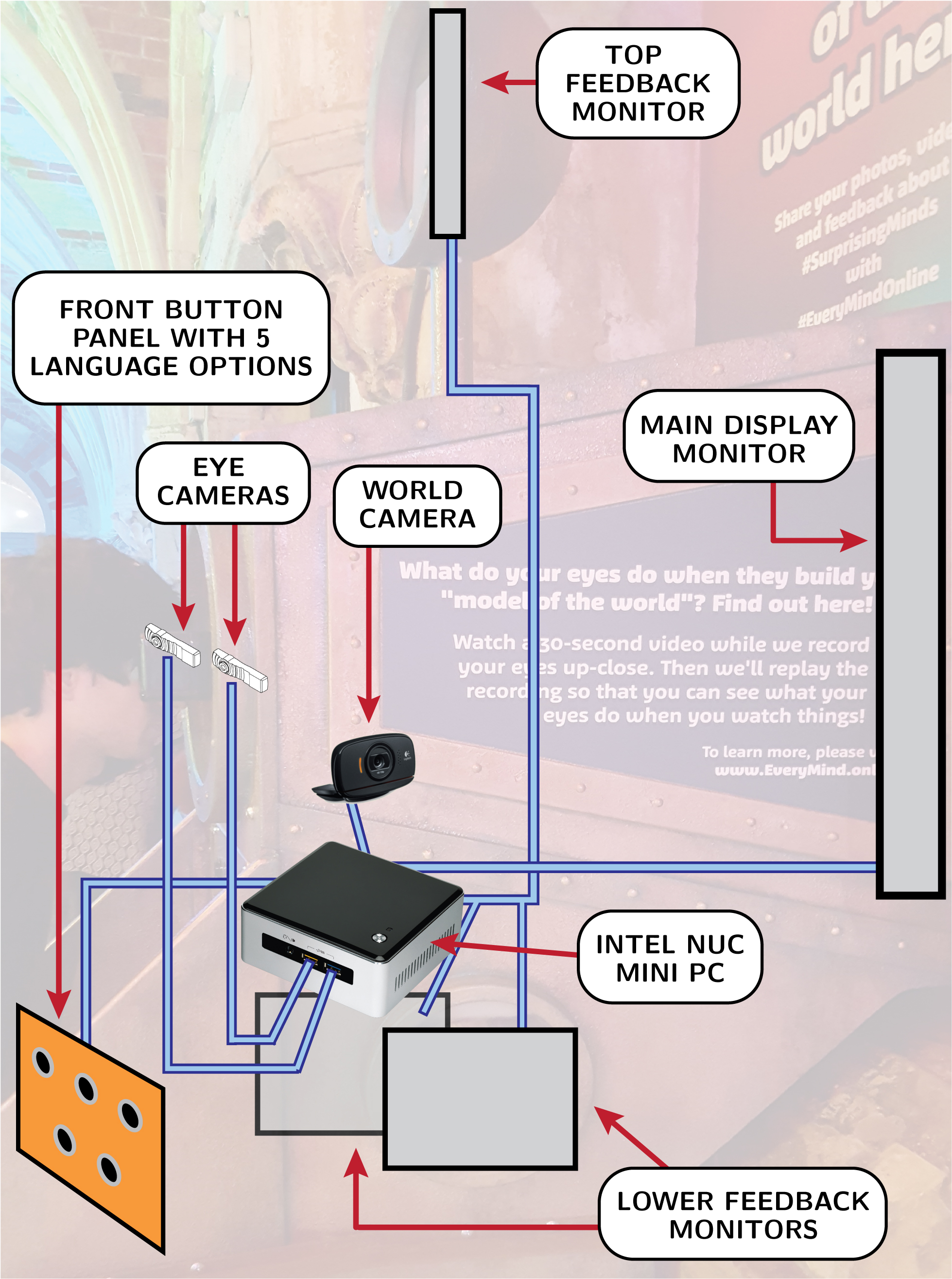

Every time a visitor activated the exhibit by pressing a language button, three cameras were activated: the two infrared “eye cameras” housed in the viewing plate, which recorded people’s eyes up close, and a USB webcam placed inside the exhibit box, called the “world camera” or our “ground truth camera”, which recorded the main display monitor inside the exhibit box. At the same time, the external monitors on the top and sides of the exhibit box changed to display the text “Do not disturb, Experiment in Progress”.

See Supp. Fig. 1b for more documentation of the hardware components controlling the exhibit interaction and saving experiment data.

Automating the exhibit

After August 2017, the exhibit was briefly taken offline so that it could be modified to run without a human host. The human host was replaced with informative text on the outside of the exhibit casing, and the written directions for participating in the experiment were translated from English into four other languages. See Supp. Fig. 1c and 1d for additional details on the upgrades made to automate the exhibit interaction and the collection of experimental data.

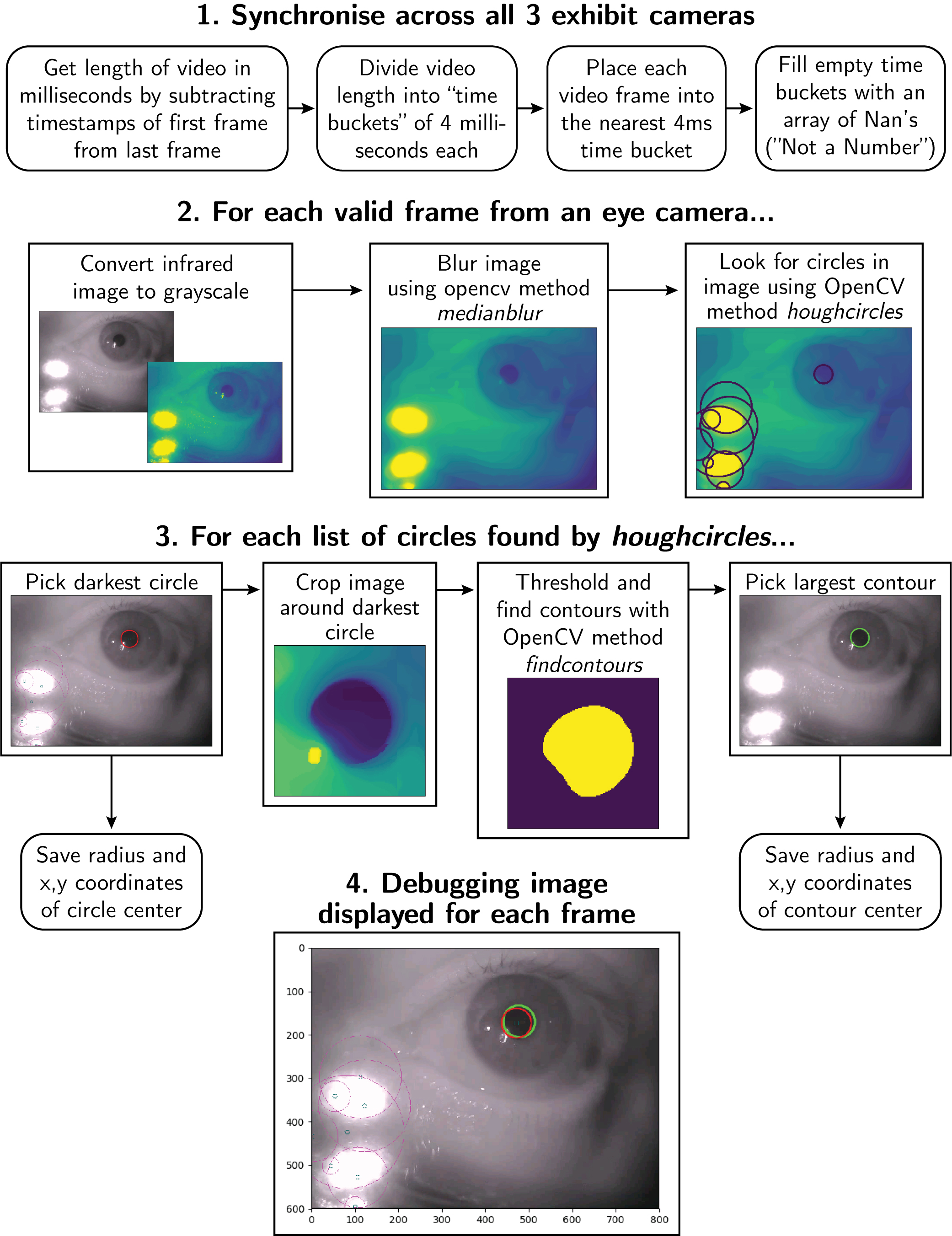

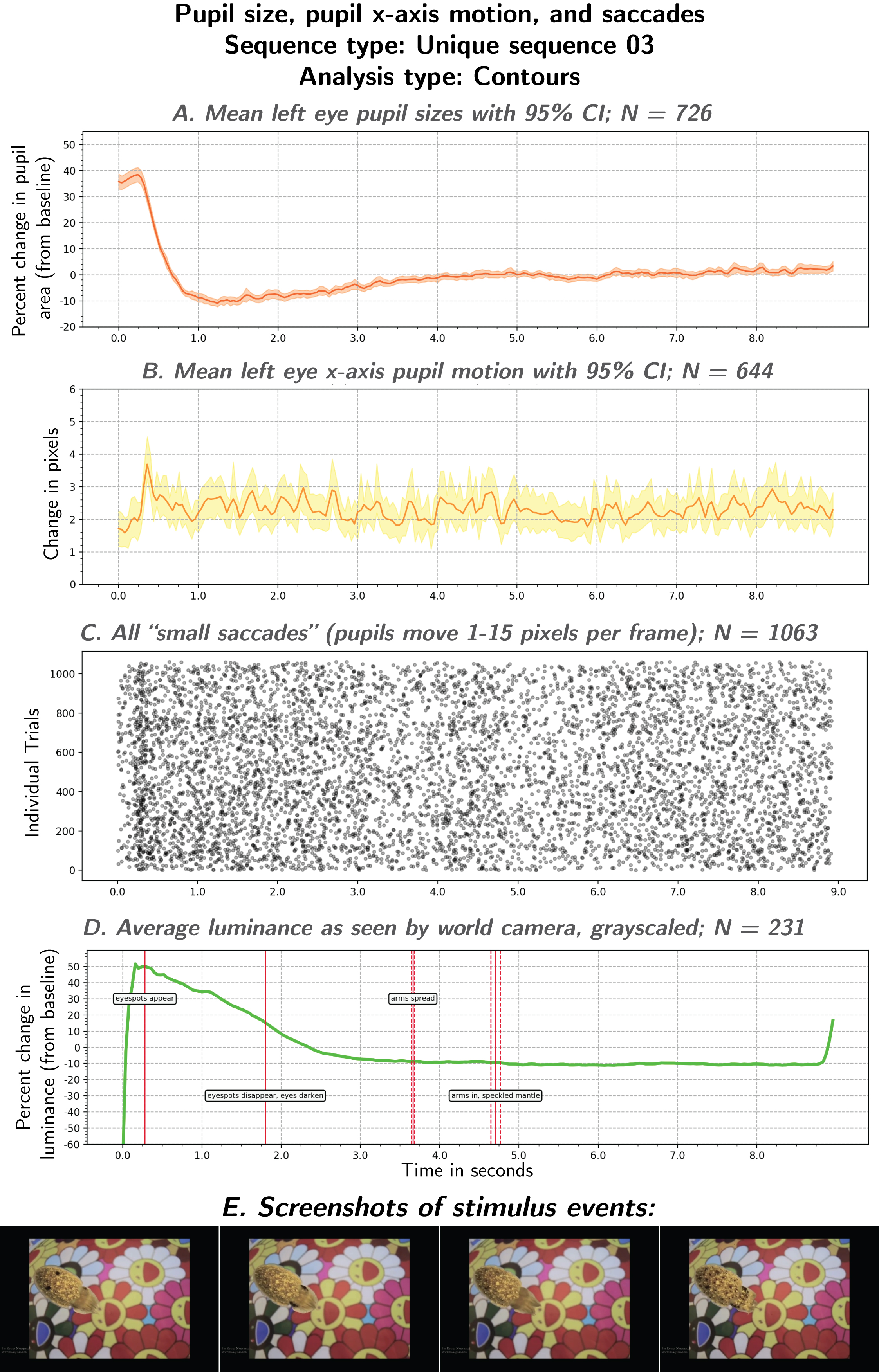

To analyse the videos recorded by the exhibit, our analysis workflow first needed to synchronise all three videos, as the eye cameras and world camera ran at different frame rates. Then, for each frame in the eye recordings, the workflow needed to find the pupil and measure both its area and the location of its centre in the frame. This information was then used to calculate changes in pupil size and motion.
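The two steps described above can be illustrated with a minimal Python sketch. It is not the analysis code used for Surprising Minds. It assumes that every frame carries a timestamp in seconds, that synchronisation is done by matching each frame to the nearest-in-time frame of another camera, and that the pupil has already been segmented into a binary mask (for example by thresholding the dark pupil in an infrared image). The function names `nearest_frame_indices` and `pupil_area_and_centre` are hypothetical.

```python
from bisect import bisect_left


def nearest_frame_indices(source_times, target_times):
    """For each target timestamp, return the index of the nearest source frame.

    Assumption: both lists hold timestamps in seconds, sorted ascending.
    """
    indices = []
    for t in target_times:
        pos = bisect_left(source_times, t)
        if pos == 0:
            indices.append(0)
        elif pos == len(source_times):
            indices.append(len(source_times) - 1)
        else:
            before, after = source_times[pos - 1], source_times[pos]
            # Pick whichever neighbouring frame is closer in time.
            indices.append(pos if after - t < t - before else pos - 1)
    return indices


def pupil_area_and_centre(mask):
    """Return (area in pixels, (x, y) centroid) for a binary pupil mask.

    Assumption: `mask` is a 2D list of 0/1 values where 1 marks pupil pixels.
    Returns None when no pupil pixels are present.
    """
    area = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                area += 1
                sum_x += x
                sum_y += y
    if area == 0:
        return None
    return area, (sum_x / area, sum_y / area)


# Example: map a fast eye camera onto a slower world camera.
world_times = [0.0, 0.1, 0.2, 0.3]
eye_times = [0.0, 0.04, 0.16, 0.5]
matched = nearest_frame_indices(world_times, eye_times)

toy_mask = [
    [0, 1, 1],
    [0, 1, 1],
    [0, 0, 0],
]
measurement = pupil_area_and_centre(toy_mask)
```

Frame-to-frame differences in the measured area then give changes in pupil size, and differences in the centroid give pupil motion.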

Results

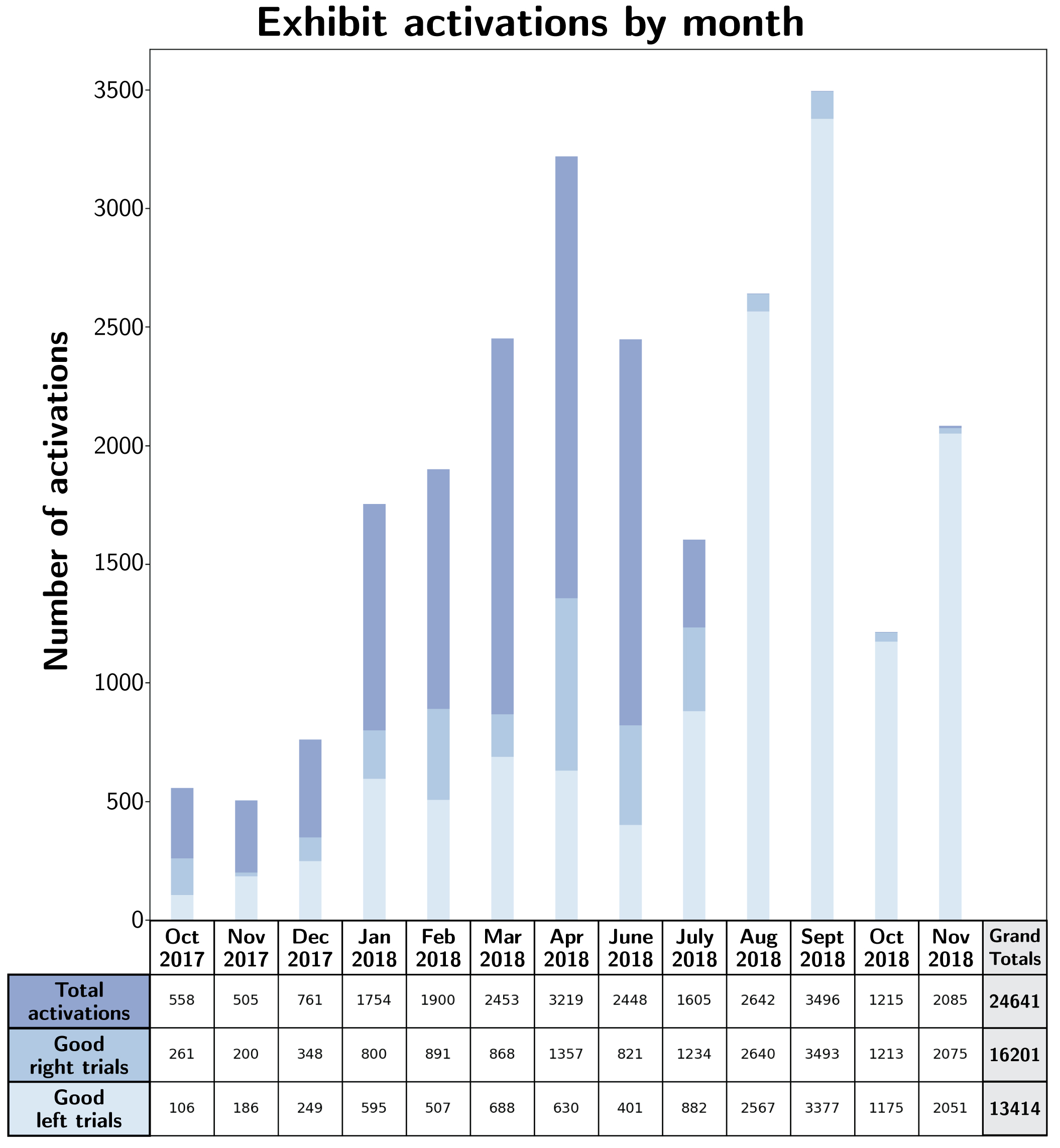

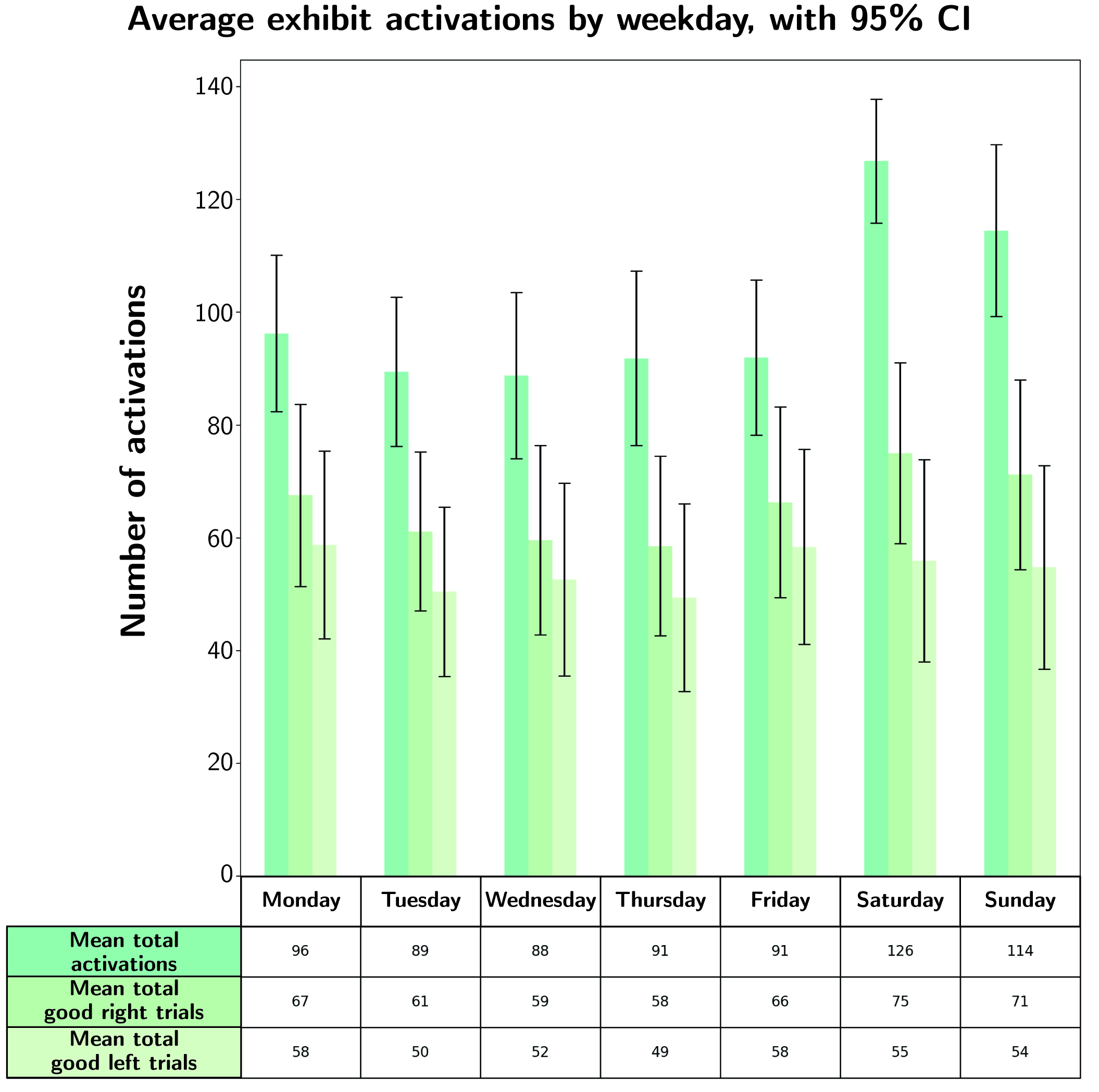

The exhibit turned out to be extremely popular. During the first month of automated data collection, it was activated 558 times, and by the time it had been running for a year, it had been activated a total of 24,641 times.

Not everyone who activated the exhibit successfully completed the experiment. In the engagement plots below, a “good trial” is one in which our analysis workflow was able to reliably find and track a pupil throughout the experiment. If our algorithm failed to find a pupil for a stretch of 100 or more consecutive frames, the entire trial was thrown out.
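The rejection rule can be sketched in a few lines of Python. This is an illustration under assumptions, not the original workflow: it supposes that each trial has been reduced to a per-frame list of booleans saying whether a pupil was detected, and the names `is_good_trial` and `max_missing_run` are hypothetical.

```python
def is_good_trial(pupil_found, max_missing_run=100):
    """Return False if the pupil is missing for `max_missing_run` or more
    consecutive frames, True otherwise.

    Assumption: `pupil_found` holds one boolean per frame, True when a pupil
    was detected in that frame.
    """
    run = 0
    for found in pupil_found:
        if found:
            run = 0  # any detection resets the count of missing frames
        else:
            run += 1
            if run >= max_missing_run:
                return False
    return True


# 99 missing frames in a row is tolerated; 100 is not.
short_gap = [True] * 50 + [False] * 99 + [True] * 50
long_gap = [True] * 50 + [False] * 100 + [True] * 50
```

Here `is_good_trial(short_gap)` keeps the trial and `is_good_trial(long_gap)` discards it, matching the threshold of 100 consecutive frames stated above.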

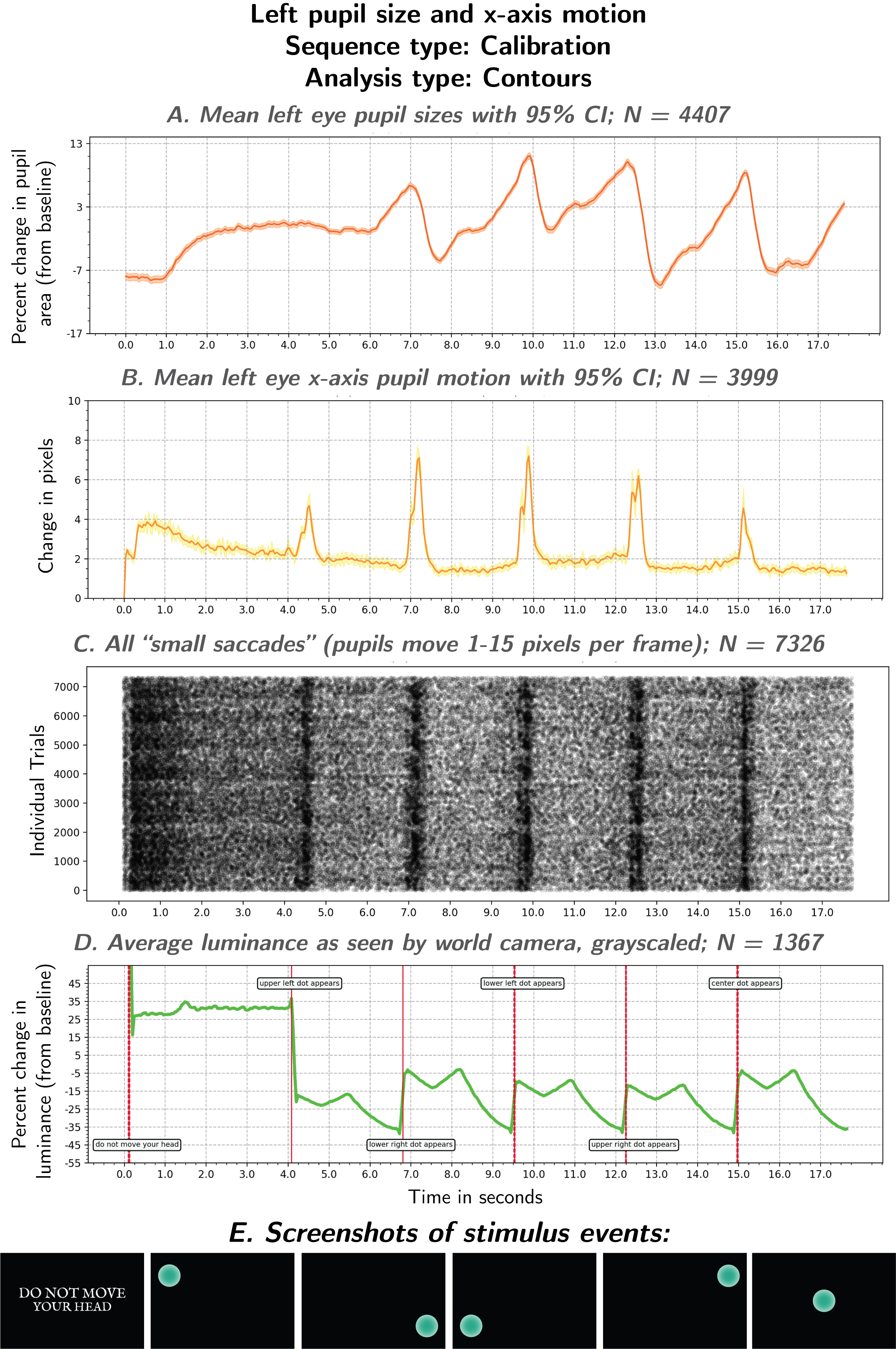

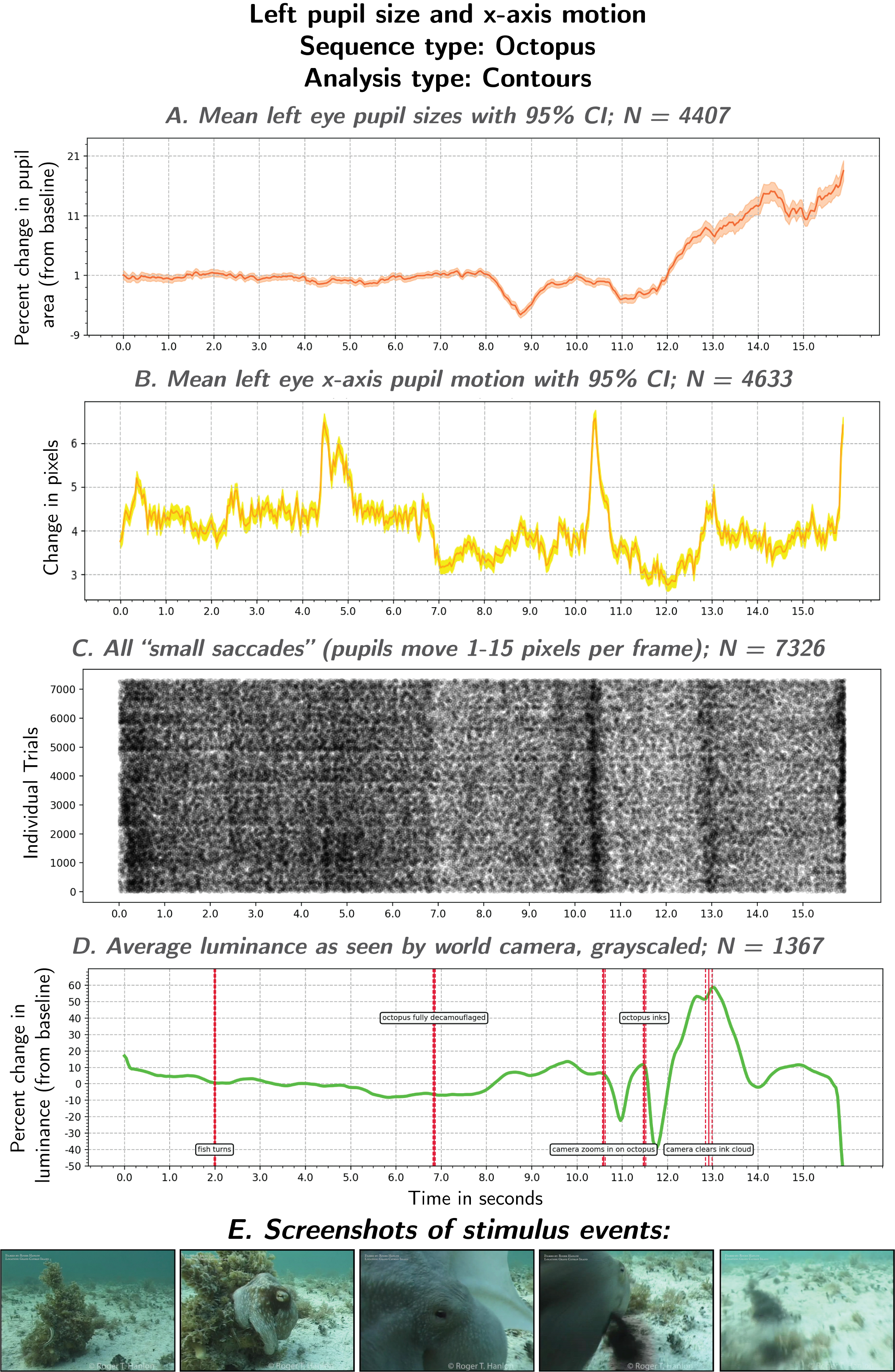

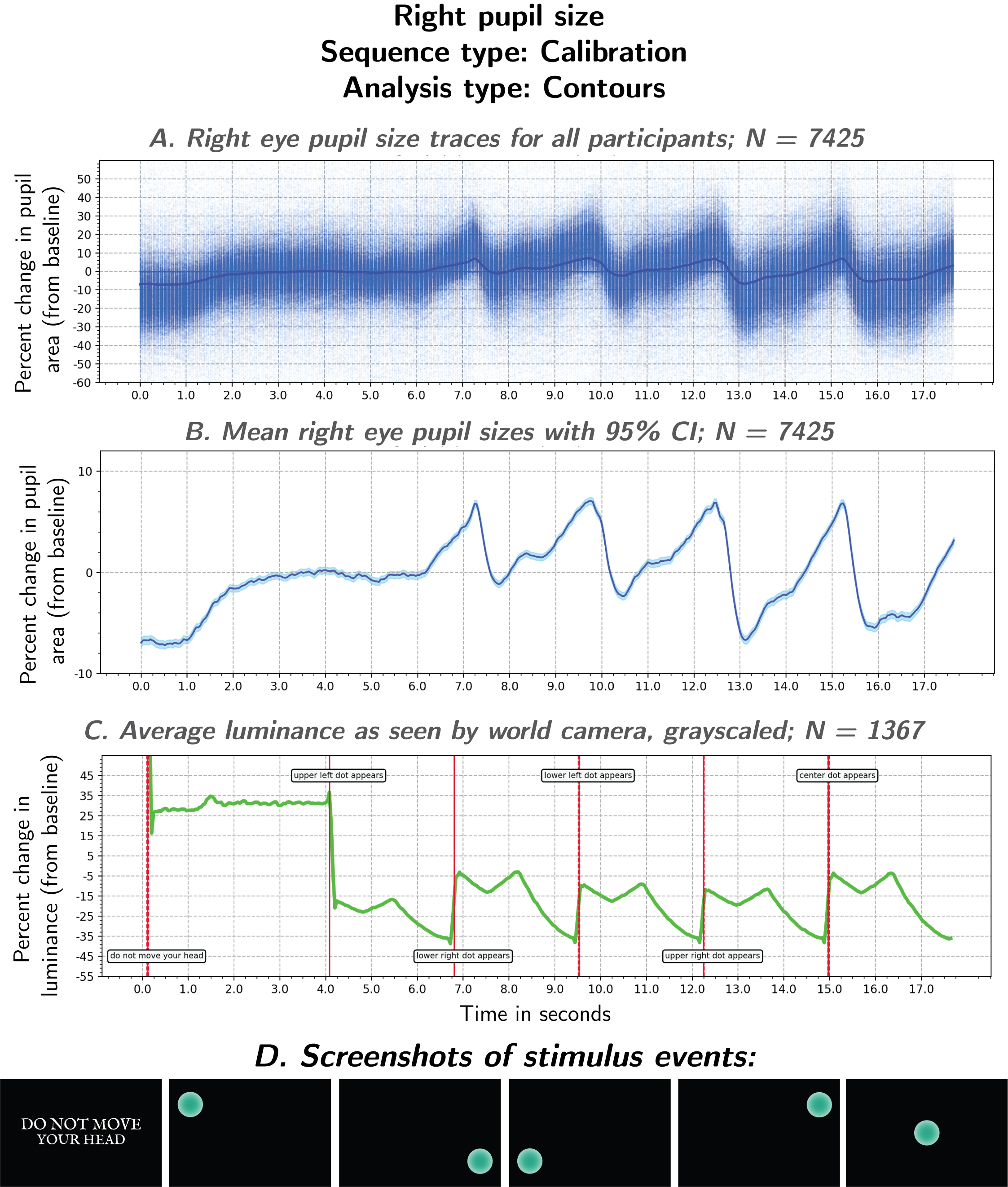

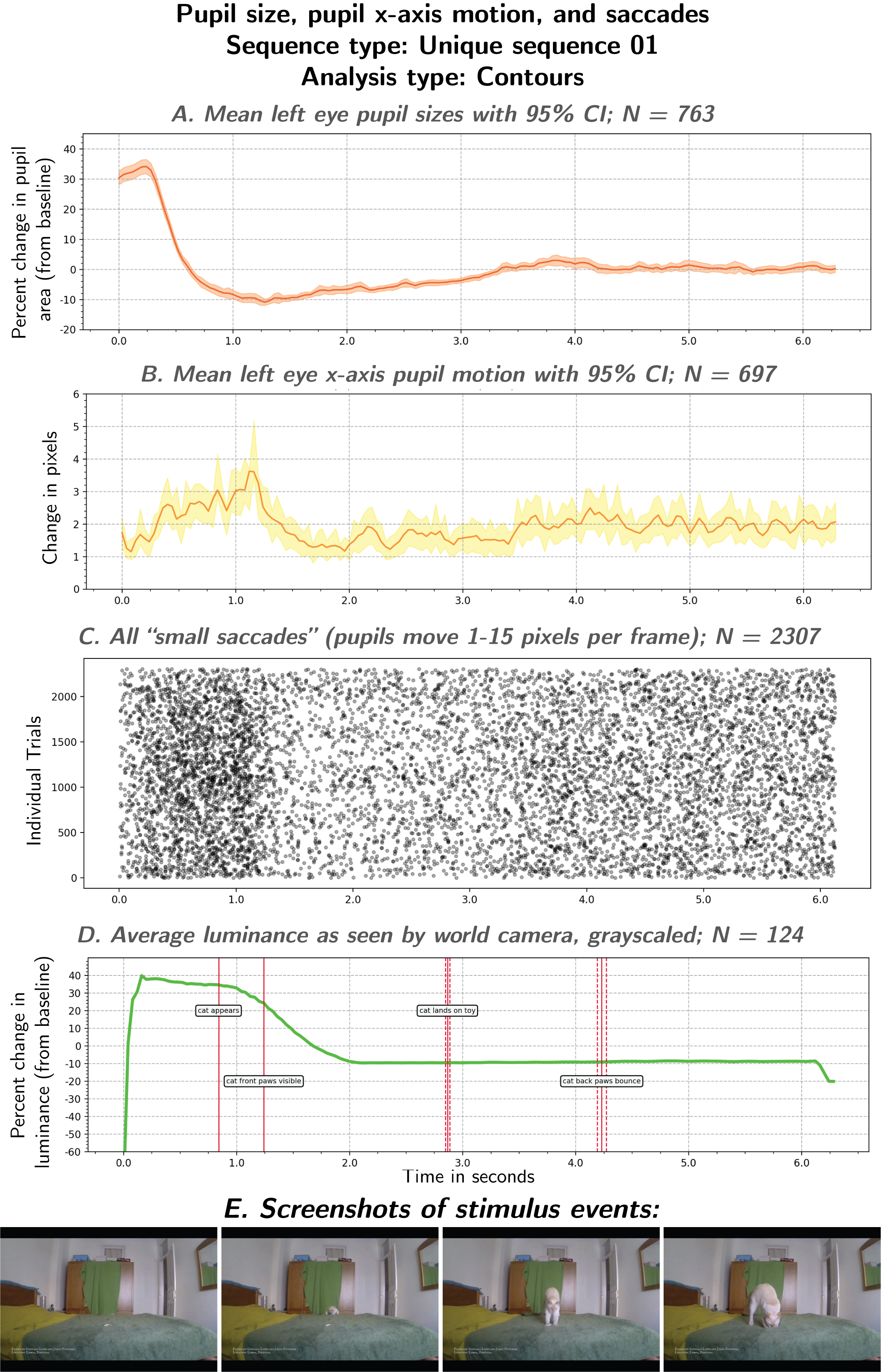

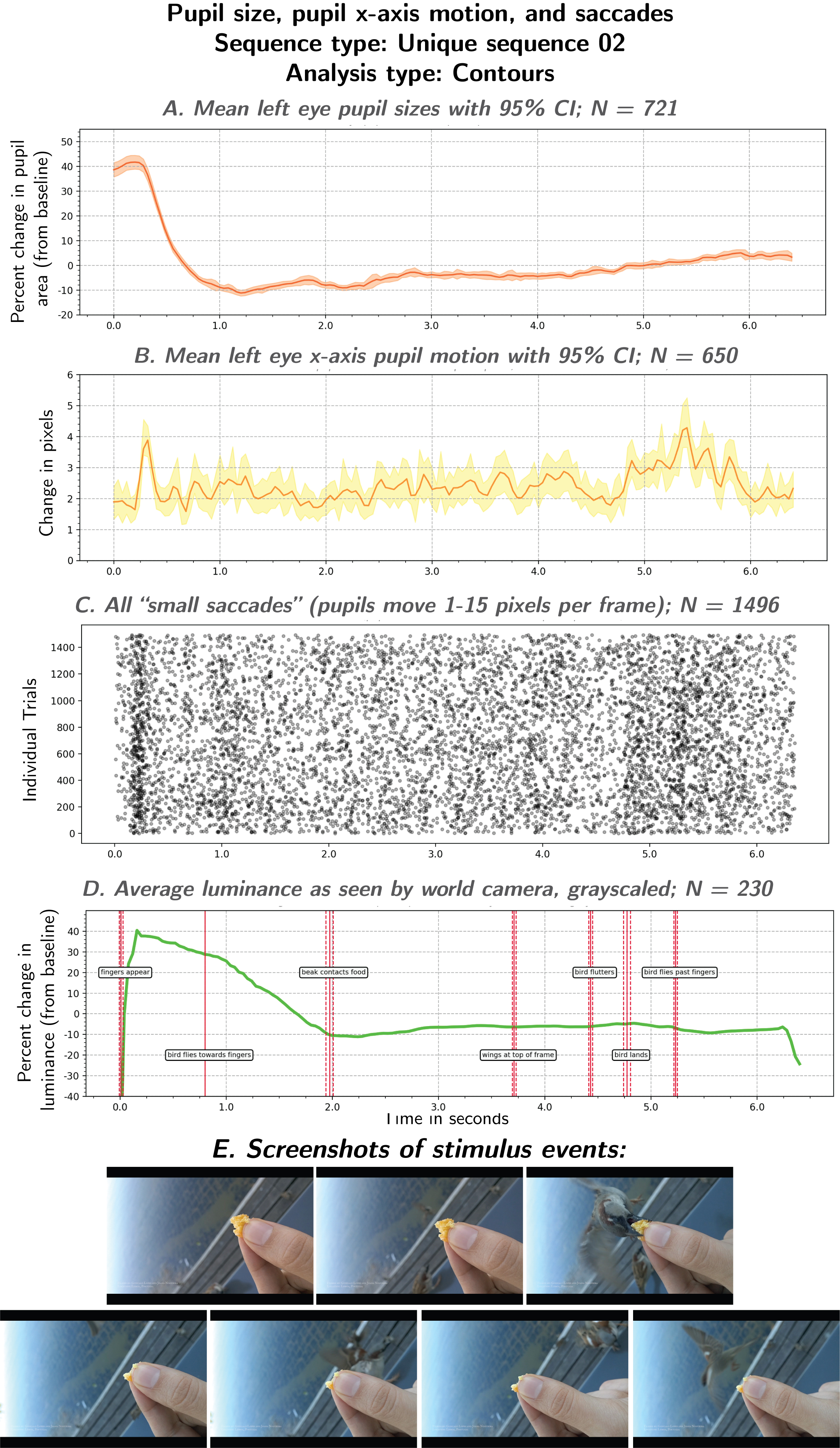

As shown in figure 3, each participant watched a calibration sequence, a unique video clip, and then a video clip of an octopus rapidly de-camouflaging. You can watch all six possible video experiences by scrolling to the bottom of this page, or by visiting the Surprising Minds Stimuli YouTube playlist.

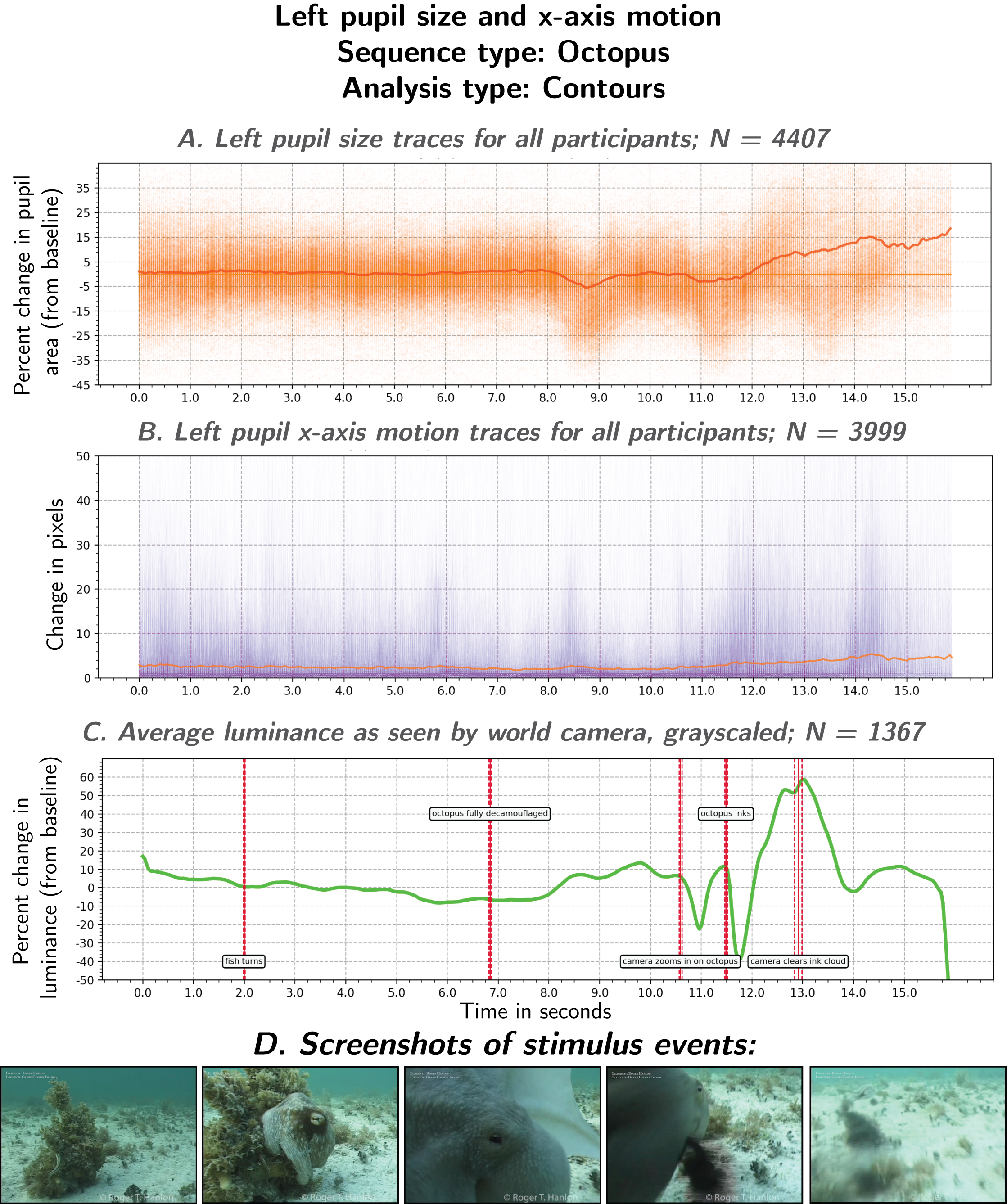

Because the calibration sequence and octopus clip were experienced by all participants, we pooled calculations of changes in pupil size and motion across all “good trials” obtained for these sequences. The plots below show pupil size and motion from all the good trials obtained with the left eye camera (N=4407).
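One way such pooling could work is sketched below in Python. The details are assumptions rather than the method actually used: each trial is expressed as a percent change from its own baseline (the mean pupil area over the first few frames), and the pooled trace is the mean across trials at each time point, skipping frames where no pupil was found. The names `percent_change_from_baseline` and `pool_trials` are hypothetical.

```python
from statistics import mean


def percent_change_from_baseline(areas, baseline_frames):
    """Express pupil areas as percent change from the trial's own baseline.

    Assumptions: `areas` has one value per frame (None where no pupil was
    found), and the baseline is the mean of the valid values in the first
    `baseline_frames` frames.
    """
    valid = [a for a in areas[:baseline_frames] if a is not None]
    if not valid:
        return [None] * len(areas)
    baseline = mean(valid)
    return [None if a is None else 100.0 * (a - baseline) / baseline
            for a in areas]


def pool_trials(trials):
    """Average equal-length trials frame by frame, ignoring missing values."""
    pooled = []
    for samples in zip(*trials):
        valid = [s for s in samples if s is not None]
        pooled.append(mean(valid) if valid else None)
    return pooled


# Two toy trials with different absolute pupil sizes.
trial_a = percent_change_from_baseline([10, 10, 12, None], baseline_frames=2)
trial_b = percent_change_from_baseline([20, 20, 22, 18], baseline_frames=2)
pooled = pool_trials([trial_a, trial_b])
```

Normalising each trial before averaging keeps participants with larger pupils, or eyes closer to the camera, from dominating the pooled trace.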

Supplemental Materials

Supplemental Figures

Supplemental Videos

SV1: Video wall display

The Surprising Minds exhibit at Sea Life Brighton included a video summarizing the Cuttle Shuttle project and introducing the concepts behind Surprising Minds:

SV2: “Resting state” video

This video played on all external monitors of the exhibit when no one had activated the experiment yet:

SV3: “Please center your eye”

As soon as anyone activated the experiment by pressing a language button on the front of the exhibit, they were shown the view from the two “eye cameras” so that they could adjust the location of their eyes in the cameras’ fields of view. In these videos, the feed from the eye cameras was displayed in the white box in the middle of the frame:

English:

French:

German:

Italian:

Chinese:

SV4: “Do not move”

Once participants had centered their eyes in the field of view of the eye cameras, they were given one last reminder to keep their heads still, as this facilitates better eye tracking during analysis:

English:

French:

German:

Italian:

Chinese:

SV5: Calibration sequence

The experiment began with a calibration sequence, to help us benchmark the experimental setup and context:

SV6: Experimental stimulus sequences

After the calibration sequence, participants were shown one of six unique video clips. The experiment coordinating software (Bonsai RX) picked the unique sequence randomly. Afterwards, all participants watched the same video of an octopus de-camouflaging (video credit: Dr. Roger Hanlon, Woods Hole, USA).

Experimental stimulus 01:

Experimental stimulus 02:

Experimental stimulus 03:

Experimental stimulus 04:

Experimental stimulus 05:

Experimental stimulus 06:
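The structure of a single trial, as described for SV5 and SV6, can be summarised in a short Python sketch. The exhibit itself was coordinated by Bonsai, so this is only an illustration of the sequence logic. It assumes that each of the six unique clips was equally likely to be chosen, and the clip labels and the function `build_trial_playlist` are hypothetical.

```python
import random

# Hypothetical labels for the six unique stimulus clips.
UNIQUE_CLIPS = [f"stimulus_{n:02d}" for n in range(1, 7)]


def build_trial_playlist(rng=random):
    """Return the ordered clips for one trial: the calibration sequence,
    one randomly chosen unique clip, then the octopus clip.

    Assumption: the unique clip is drawn uniformly at random.
    """
    unique_clip = rng.choice(UNIQUE_CLIPS)
    return ["calibration", unique_clip, "octopus"]


# A seeded generator makes the example repeatable.
playlist = build_trial_playlist(random.Random(0))
```

Because the calibration and octopus sequences appear in every playlist, they are the only sequences that can be pooled across all participants.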

SV7: Replay recordings from eye cameras

After the octopus sequence, all participants were instructed to watch the external monitors for a replay of the video recording of their eyes during the calibration, unique, and octopus sequences:

English:

French:

German:

Italian:

Chinese: